main.cpp - all the ray tracing code

assn2 - the entire studio workspace

This ray tracer, as per the assignment, performs antialiasing and soft shadows (i.e., area light sources) through jittered supersampling. It also does texture mapping and blurred reflection. Below I describe my implementation of each of these features and the issues I noted.

I built the ray tracer's added functionality on top of a cut-and-pasted assemblage of other code. Starting with the parser provided for our class, I added the assignment 1 ray tracer to the main cpp file. I then added the targa read and write code also provided for our class. To this, I added the additional functions one at a time, since they are relatively independent.

Jittered supersampling and area light sources:

Jittered supersampling allows for area light sources and antialiased images. First, shoot samples on a grid finer than the final display pixel size. Jitter each sample uniformly within its cell of the finer grid, and keep track of the weight associated with that sample, given by a Gaussian centered at the display pixel center and evaluated at the sample's distance from that center. For each such ray, when a shadow ray is cast toward an area light source, jitter the shadow ray when determining lighting. Once all rays have been computed, compute for each display pixel the weighted average of the samples within a reasonable support of the pixel's Gaussian.
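The sampling step described above can be sketched roughly as follows. This is only an illustration, not the code in main.cpp: the names `Sample`, `gaussianWeight`, and `jitteredSamples` are hypothetical, and the weight here is computed only against the owning pixel's center (neighboring pixels within the support would evaluate `gaussianWeight` against their own centers).

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// One sub-pixel sample (hypothetical layout, for illustration only).
struct Sample {
    double x, y;     // position in display-pixel coordinates
    double weight;   // Gaussian weight relative to the owning pixel's center
};

// Gaussian falloff of a sample at offset (dx, dy) from a pixel center.
// Left unnormalized: the final average divides by the sum of the weights.
double gaussianWeight(double dx, double dy, double sigma) {
    return std::exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma));
}

// Generate n*n jittered samples for display pixel (px, py).
// Each sample is placed uniformly at random inside its own sub-cell.
std::vector<Sample> jitteredSamples(int px, int py, int n, double sigma,
                                    std::mt19937& rng) {
    std::uniform_real_distribution<double> unit(0.0, 1.0);
    std::vector<Sample> samples;
    samples.reserve(static_cast<std::size_t>(n) * n);
    const double cx = px + 0.5;  // pixel center
    const double cy = py + 0.5;
    for (int j = 0; j < n; ++j) {
        for (int i = 0; i < n; ++i) {
            double x = px + (i + unit(rng)) / n;
            double y = py + (j + unit(rng)) / n;
            samples.push_back({x, y, gaussianWeight(x - cx, y - cy, sigma)});
        }
    }
    return samples;
}
```

For instance, `jitteredSamples(3, 5, 4, 0.5, rng)` would produce 16 samples inside pixel (3, 5), one per cell of a 4 by 4 sub-grid.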

Since multiple samples contribute to a pixel's final color, the jaggies normally found around edges in the scene are smoothed out. Also, the noise from jittering the shadow rays (which on its own creates uneven, salt-and-pepper shadows) gets evened out and leaves penumbras, areas partially in shadow, as seen below.
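As a rough illustration of jittering a shadow ray, the sketch below picks a random target point on the light for each shadow ray. It assumes a rectangular light described by a corner and two edge vectors; the writeup does not say what shape the area lights have, so `AreaLight` and `sampleLight` are hypothetical names and not taken from main.cpp.

```cpp
#include <random>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }

// Hypothetical rectangular area light: a corner plus two edge vectors.
struct AreaLight { Vec3 corner, edgeU, edgeV; };

// Pick a uniformly random point on the light as the target of one shadow ray.
Vec3 sampleLight(const AreaLight& light, std::mt19937& rng) {
    std::uniform_real_distribution<double> unit(0.0, 1.0);
    double u = unit(rng);
    double v = unit(rng);
    return light.corner + u * light.edgeU + v * light.edgeV;
}
```

Each primary sample would cast a single shadow ray toward such a point. Individually that gives a hard, noisy result, but averaging many samples per pixel approximates the fraction of the light that is visible.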

In the image on the left, aliasing artifacts can be seen along the teapot's lid and along shadows in the scene. The image on the right was created with 16 samples per pixel and downsized with a Gaussian filter (standard deviation about 0.6 pixel). Notice that many of the left image's artifacts are blurred away in the right.

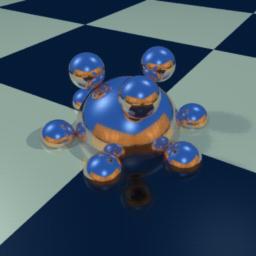

Images clockwise. The light creating the shadow in the lower left of each image is an area light source. At one sample per pixel, you can clearly see the speckle on the shadow boundary and also on the specular highlight of the light source on the balls. The images then follow with 4 samples, 9 samples, and 16 samples per pixel. The latter three are all filtered with a Gaussian of standard deviation 0.5 pixel width. We end up with almost unnoticeable speckle in the final image. Notice that the number of samples per pixel that should be shot to reduce the speckle is directly proportional to the thickness of the partially lit boundary, or penumbra.

I ran into several important points in implementing these features. First, when samples over an area are being averaged for final display, it is vital for energy conservation to divide the weighted sum by the sum of the weights used during the averaging. Without this, I get uneven darkness in the images. Second, I hard code the reasonable support over which to compute this weighted average as three display pixels, so I am essentially convolving with a Gaussian over a nine-pixel area for each pixel. I may be losing some of the energy in the tails of the Gaussian, which extend to infinity, but dividing by the sum of weights compensates for that as well.

Texture Mapping:

There are only a few things that need to be done for texture mapping once the texture file input/output has been figured out (code that was provided for our class).

First, for ray-triangle intersections, I used the algorithm presented by Brian in class, from Tomas Möller, which computes the barycentric coordinates of an intersection. These coordinates (the third being 1 minus the sum of the other two) can be used to linearly interpolate between values (colors, normals, etc.) at the triangle vertices.
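For reference, here is a minimal sketch of a Möller-Trumbore style ray-triangle test that returns the barycentric coordinates, followed by the interpolation they allow. It is written from the commonly published form of the algorithm, not copied from the class code; `Hit`, `intersectTriangle`, and `interpolate` are hypothetical names.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Ray parameter t and barycentric coordinates (u, v) of the hit point.
// The weight of vertex v0 is 1 - u - v.
struct Hit { double t, u, v; };

// Returns true and fills `hit` if the ray orig + t*dir hits triangle (v0, v1, v2).
bool intersectTriangle(Vec3 orig, Vec3 dir, Vec3 v0, Vec3 v1, Vec3 v2, Hit& hit) {
    const double eps = 1e-9;
    Vec3 e1 = v1 - v0;
    Vec3 e2 = v2 - v0;
    Vec3 p = cross(dir, e2);
    double det = dot(e1, p);
    if (std::fabs(det) < eps) return false;      // ray parallel to the triangle
    double inv = 1.0 / det;
    Vec3 tvec = orig - v0;
    double u = dot(tvec, p) * inv;
    if (u < 0.0 || u > 1.0) return false;        // outside along the e1 direction
    Vec3 q = cross(tvec, e1);
    double v = dot(dir, q) * inv;
    if (v < 0.0 || u + v > 1.0) return false;    // outside the triangle
    double t = dot(e2, q) * inv;
    if (t < eps) return false;                   // hit is behind the ray origin
    hit = Hit{t, u, v};
    return true;
}

// Blend per-vertex values a0, a1, a2 using the barycentric weights.
double interpolate(const Hit& h, double a0, double a1, double a2) {
    return (1.0 - h.u - h.v) * a0 + h.u * a1 + h.v * a2;
}
```

The same `interpolate` call can be applied per component to colors, normals, or texture coordinates stored at the vertices.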

For texture-mapped triangles, u v coordinates are provided for each vertex, referencing places on the image being textured. For every triangle intersection that I compute lighting for, I simply multiply the material properties (ambient, diffuse, specular) by the image color of the triangle at that spot, and proceed with the lighting.

This added very little additional computation: I interpolate between the vertex u v coordinates, using the barycentric coordinates, to get the image coordinate at the ray-triangle intersection point. One could bilinearly interpolate between the image colors surrounding the image coordinate needed. I just get the color at (floor(u),floor(v)) and use that, letting the supersampling take care of blending. This may be better anyway, since it avoids blurring the image twice (once for interpolation, again during the downsizing stage).
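A nearest-texel lookup along these lines could be sketched as below. This assumes u and v are already expressed in texel units (as the floor(u), floor(v) lookup suggests) and that the image is stored row by row; the `Texture` layout and the names `lookupTexel` and `modulate` are hypothetical, and clamping at the image border is my own choice rather than something the writeup specifies.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct Color { double r, g, b; };

// Hypothetical texture layout: row-major texels, width * height entries.
struct Texture {
    int width, height;
    std::vector<Color> texels;
};

// Nearest-texel lookup at (floor(u), floor(v)), clamped to the image bounds.
Color lookupTexel(const Texture& tex, double u, double v) {
    int x = std::max(0, std::min(tex.width - 1, static_cast<int>(std::floor(u))));
    int y = std::max(0, std::min(tex.height - 1, static_cast<int>(std::floor(v))));
    return tex.texels[static_cast<std::size_t>(y) * tex.width + x];
}

// Scale one material term (ambient, diffuse, or specular) by the texel color.
Color modulate(Color material, Color texel) {
    return {material.r * texel.r, material.g * texel.g, material.b * texel.b};
}
```

The three material terms would each be passed through `modulate` before the usual lighting computation.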

Blurred Reflection:

Blurred reflection (and refraction) jitters the reflected or transmitted ray in order to obtain a truer color for a less than perfectly specular surface. I do this by sampling a Gaussian when determining reflection and transmission rays. The reflection ray is normalized, and I look at a Gaussian on a plane whose normal is the ray. I then create a vector by which to perturb the ray, and renormalize. The color returned by this ray is weighted according to its distance on the Gaussian plane to the perfect reflection ray. A hard-coded number of such rays are shot and a weighted average computed. The perfect reflection ray is always shot, which doesn't bias the final computation too much if there are enough rays shot, and it makes the image look less noisy for large Gaussians.
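The perturbation step can be sketched as follows. This is a guess at one reasonable construction, not the code in main.cpp: it builds two unit vectors spanning the plane perpendicular to the reflection ray, offsets the ray by (dx, dy) in that plane, and renormalizes. How the offsets are drawn is left to the caller, and the names `tangentBasis`, `perturb`, and `offsetWeight` are hypothetical.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) { return (1.0 / std::sqrt(dot(v, v))) * v; }

// Build two unit vectors t, b spanning the plane perpendicular to unit vector n.
void tangentBasis(Vec3 n, Vec3& t, Vec3& b) {
    // Pick a world axis that is not parallel to n, so the cross product is nonzero.
    Vec3 axis = (std::fabs(n.x) < 0.9) ? Vec3{1.0, 0.0, 0.0} : Vec3{0.0, 1.0, 0.0};
    t = normalize(cross(n, axis));
    b = cross(n, t);
}

// Offset the unit reflection direction r by (dx, dy) in its perpendicular
// plane, then renormalize to get the perturbed ray direction.
Vec3 perturb(Vec3 r, double dx, double dy) {
    Vec3 t{0.0, 0.0, 0.0};
    Vec3 b{0.0, 0.0, 0.0};
    tangentBasis(r, t, b);
    return normalize(r + dx * t + dy * b);
}

// Gaussian weight of a perturbed ray, based on its distance in the plane
// from the perfect reflection ray (which sits at offset (0, 0)).
double offsetWeight(double dx, double dy, double sigma) {
    return std::exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma));
}
```

The colors returned by the perturbed rays would then be combined as a weighted average, dividing by the sum of the `offsetWeight` values, with the unperturbed ray included at offset (0, 0).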

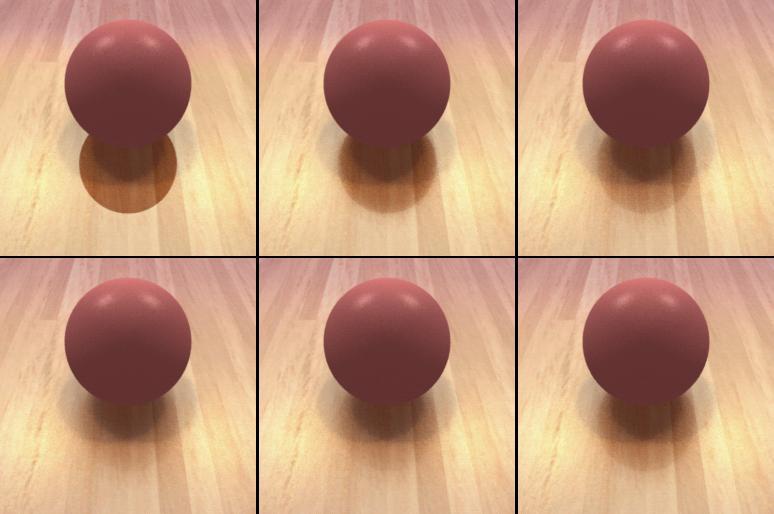

Images clockwise. We start with a slightly reflective texture-mapped surface, with a ball placed on top. Only the exact reflection vector is computed. Then a Gaussian reflection perturbation of as much as .5 (or about 14 degrees if at the end of a unit vector), with 11 reflection rays computed. Third is 1.5 perturbation (36 degrees), 11 samples. Fourth is the same, but with 21 samples. Finally, 2.5 possible perturbation (51 degrees), 40 samples, and 80 samples. Notice the trend towards more glossy and less mirrorlike reflection. Compare the last of this series with the shiny wood floor of the teapot picture at the top of this page.

There are issues I dealt with for blurred reflections. With a more functional parser implementation, the glossiness (the extent of the Gaussian) would be an object-specific property. I have actually included it as such, but the parser does not handle sigma (standard deviation) as a surface property. Also, blurred reflection exponentially increases the complexity of a rendering if the scene is complex enough. That's why such a simple one-level recursion is used in the above series, rather than a reflective teapot scene like the one at the top of this page.
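To make the exponential growth concrete, the small sketch below counts the rays traced for one primary ray under the worst-case assumption that every hit lands on a blurry reflective surface and spawns the same number of reflection rays. `raysPerPrimary` is a hypothetical helper, not part of main.cpp.

```cpp
// Worst-case count of rays traced for one primary ray when every hit
// spawns k reflection rays, down to the given recursion depth.
// The total is 1 + k + k^2 + ... + k^depth.
unsigned long long raysPerPrimary(unsigned k, unsigned depth) {
    unsigned long long total = 1;  // the primary ray itself
    unsigned long long level = 1;  // number of rays at the current depth
    for (unsigned i = 0; i < depth; ++i) {
        level *= k;
        total += level;
    }
    return total;
}
```

With 11 reflection rays, one level of recursion costs 12 rays per primary ray, while three levels would already cost 1464.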

Conclusions:

Wow, this is cool! With more time, I would implement contrast-driven adaptive supersampling, depth of field, and texture mapping on other primitives (prisms and so on). If I had a few more billion FLOPS, I would be making movies right now.